Here, instances mean observations (samples). It worked, but it wasn't that efficient.

Boosting Algorithms: AdaBoost and XGBoost

However, there are significant practical differences under the hood.

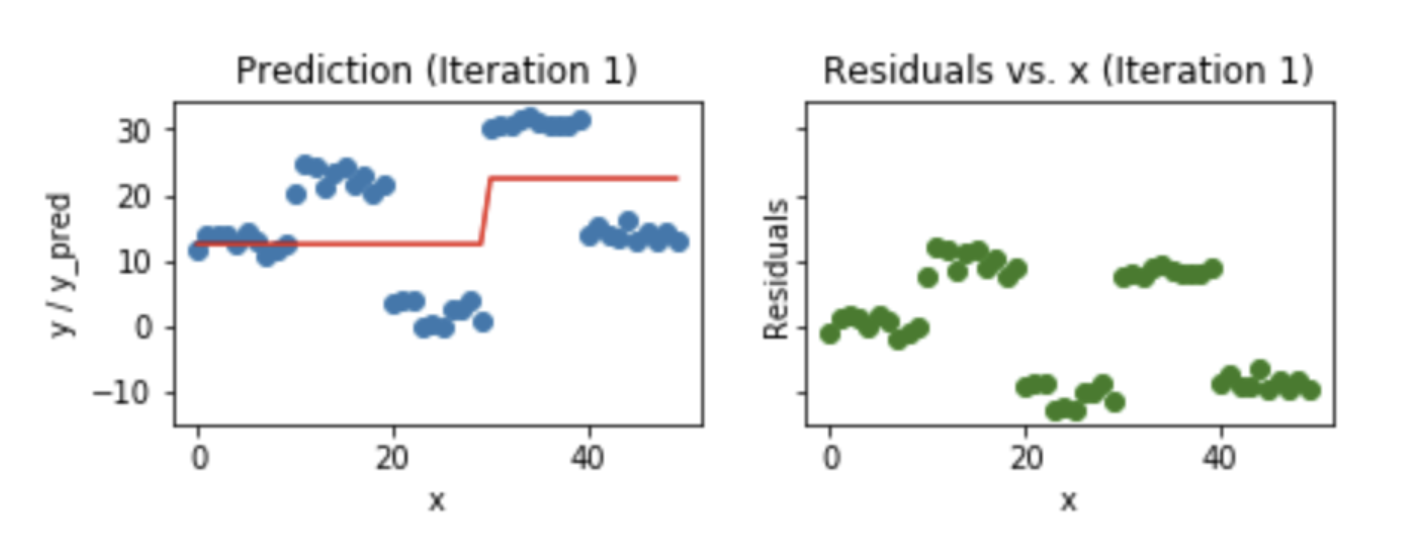

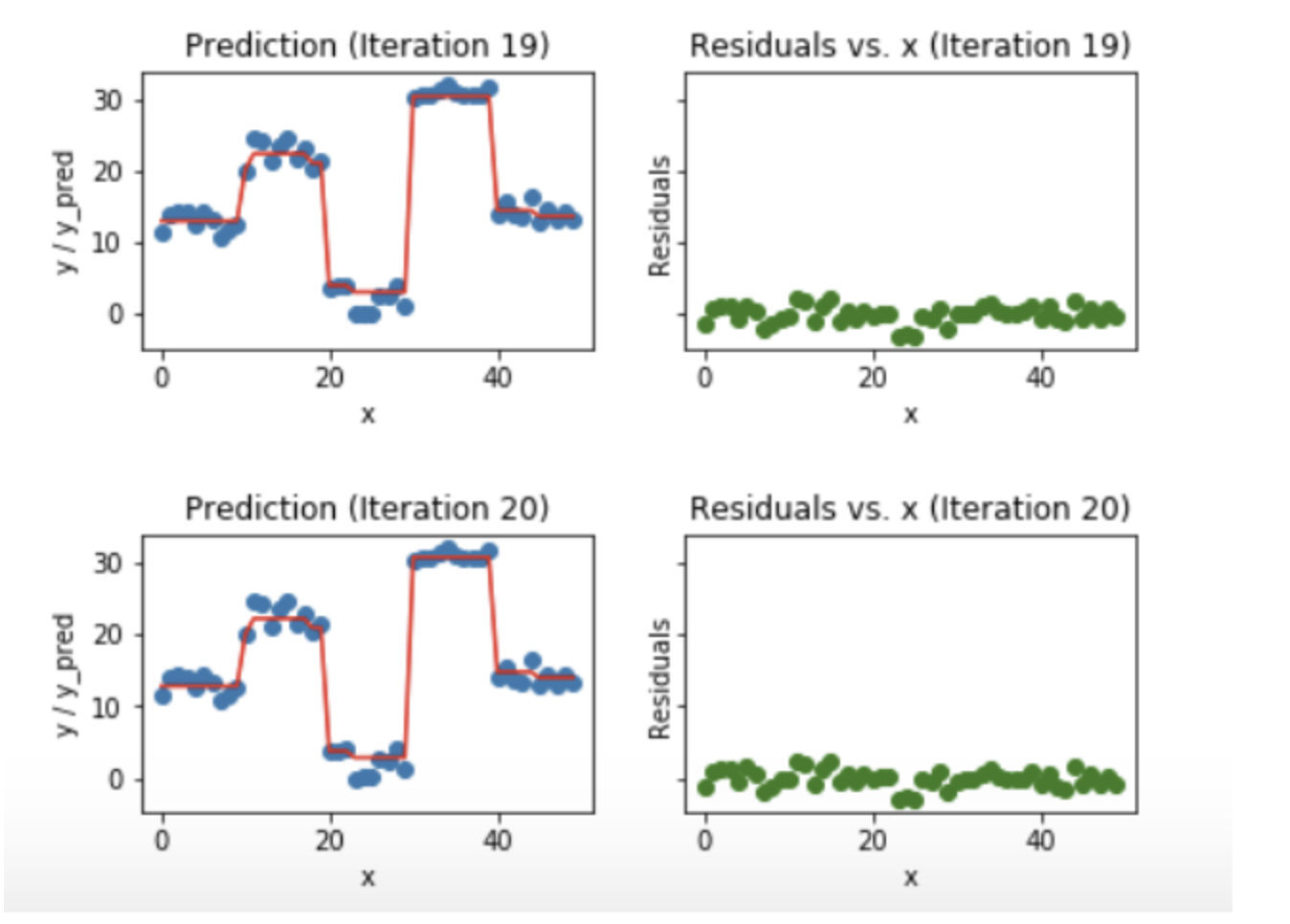

XGBoost is generally faster than classic gradient boosting, but gradient boosting has a wide range of applications. AdaBoost (Adaptive Boosting) works by reweighting the training instances so that each new learner concentrates on the instances its predecessors misclassified. Boosting is the process of building decision trees sequentially to minimize errors.

There is a technique called Gradient Boosted Trees whose base learner is CART (Classification and Regression Trees). XGBoost uses advanced regularization (L1 and L2), which improves the model's ability to generalize. XGBoost is one of the most popular variants of gradient boosting.
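To make the L1/L2 regularization concrete, here is a minimal sketch of the regularized leaf value and split gain from the XGBoost formulation. It assumes a leaf's examples are summarized by their gradient sum G and Hessian sum H. The function names are hypothetical, and `lam`, `alpha` and `gamma` only loosely mirror XGBoost's `reg_lambda`, `reg_alpha` and `gamma` parameters; this is an illustration, not the library's code.

```python
def soft_threshold(g_sum, alpha):
    """L1 shrinkage: move the gradient sum toward zero by alpha, clipping at zero."""
    if g_sum > alpha:
        return g_sum - alpha
    if g_sum < -alpha:
        return g_sum + alpha
    return 0.0


def leaf_weight(g_sum, h_sum, lam=1.0, alpha=0.0):
    """Regularized optimal leaf value -T_alpha(G) / (H + lam), written as T_alpha(-G) / (H + lam)."""
    return soft_threshold(-g_sum, alpha) / (h_sum + lam)


def split_gain(gl, hl, gr, hr, lam=1.0, gamma=0.0):
    """Loss reduction of a split; lam is the L2 term, gamma a per-leaf complexity penalty."""
    def score(g, h):
        return g * g / (h + lam)
    return 0.5 * (score(gl, hl) + score(gr, hr) - score(gl + gr, hl + hr)) - gamma


# A leaf whose examples have gradient sum G = -4 and Hessian sum H = 2.
print(leaf_weight(-4.0, 2.0, lam=0.0))             # 2.0 (no regularization)
print(leaf_weight(-4.0, 2.0, lam=2.0))             # 1.0 (L2 shrinks the value)
print(leaf_weight(-4.0, 2.0, lam=0.0, alpha=1.0))  # 1.5 (L1 shrinks it as well)
print(leaf_weight(-4.0, 2.0, lam=0.0, alpha=5.0))  # 0.0 (L1 zeroes a weak leaf)
print(split_gain(-4.0, 2.0, 4.0, 2.0, lam=0.0))              # 8.0
print(split_gain(-4.0, 2.0, 4.0, 2.0, lam=0.0, gamma=10.0))  # -2.0, so no split
```

The L2 term shrinks every leaf value toward zero, the L1 term can set a leaf exactly to zero, and `gamma` makes a split pay for the extra leaf, which is how the regularized objective discourages overly complex trees.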

It is based on gradient boosted decision trees. They work well for a class of problems, but they do… eXtreme Gradient Boost (XGBoost) is a decision-tree-based ensemble method that makes use of gradient boosting. This post provides an introduction to the XGBoost technique.

Gradient boosted decision trees come in several flavors: AdaBoost, gradient boosting and XGBoost. Plain gradient boosting has no explicit penalty on model complexity in its objective, whereas XGBoost adds a regularization term, so it can also control the trade-off between bias and variance.

In this article I'll summarize each introductory paper. Boosting is a method of converting a set of weak learners into a strong learner. The R package gbm uses gradient boosting by default.

However, efficiency and scalability are still unsatisfactory when the data has many features. Its training is very fast and can be parallelized or distributed across clusters.

You are correct: XGBoost (eXtreme Gradient Boosting) and sklearn's gradient boosting (GradientBoostingClassifier and GradientBoostingRegressor) are fundamentally the same, as both are gradient boosting implementations. Two modern algorithms that build gradient boosted tree models are XGBoost and LightGBM. GBM uses the first-order derivative of the loss function at the current boosting iteration, while XGBoost uses both the first- and second-order derivatives.
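The difference between using only first-order derivatives and using second-order ones as well can be seen on a single leaf. The sketch below assumes the log loss, a leaf holding four examples, and raw scores that all start at 0. The "first-order" value is simply the mean negative gradient (some GBM implementations refine leaf values further, so this contrasts the two update rules rather than describing any one library), while the Newton value divides the gradient sum by the Hessian sum. All function names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def logloss_grad_hess(y, raw):
    """First and second derivatives of the log loss w.r.t. the raw score."""
    p = sigmoid(raw)
    return p - y, p * (1.0 - p)


def first_order_leaf(ys, raws):
    """Gradient-only step: the mean negative gradient of the leaf's examples."""
    grads = [logloss_grad_hess(y, r)[0] for y, r in zip(ys, raws)]
    return -sum(grads) / len(grads)


def newton_leaf(ys, raws, lam=0.0):
    """Second-order (Newton) step: -sum(g) / (sum(h) + lam)."""
    g_sum = h_sum = 0.0
    for y, r in zip(ys, raws):
        g, h = logloss_grad_hess(y, r)
        g_sum += g
        h_sum += h
    return -g_sum / (h_sum + lam)


# One leaf with labels 1, 1, 1, 0 and every raw score starting at 0 (p = 0.5).
ys, raws = [1, 1, 1, 0], [0.0, 0.0, 0.0, 0.0]
print(first_order_leaf(ys, raws))  # 0.25
print(newton_leaf(ys, raws))       # 1.0
print(math.log(3))                 # about 1.0986: the best constant log-odds here
```

The Newton step lands much closer to the optimal leaf value in one move because the Hessian tells it how curved the loss is; a gradient-only step has to rely on the learning rate and more rounds instead.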

In this algorithm, decision trees are created sequentially. The Gradient Boosters V. Gradient Boosting Decision Trees (GBDT) are currently…

We'll compare XGBoost, LightGBM and CatBoost to the older GBM, measuring accuracy and speed on four fraud-related datasets. Gradient boosted decision trees are the state of the art for structured data problems. XGBoost (Extreme Gradient Boosting) is a gradient boosting library for Python.

It has quite effective implementations, such as XGBoost, which add many optimization techniques on top of this algorithm. Gradient boosting is also a boosting algorithm, so it also tries to create a strong learner from an ensemble of weak learners. While XGBoost and LightGBM reigned over ensembles in Kaggle competitions, another contender was born at Yandex, the Google of Russia.

It is decision-tree-based. It decided to take the path less trodden and took a different approach to gradient boosting.

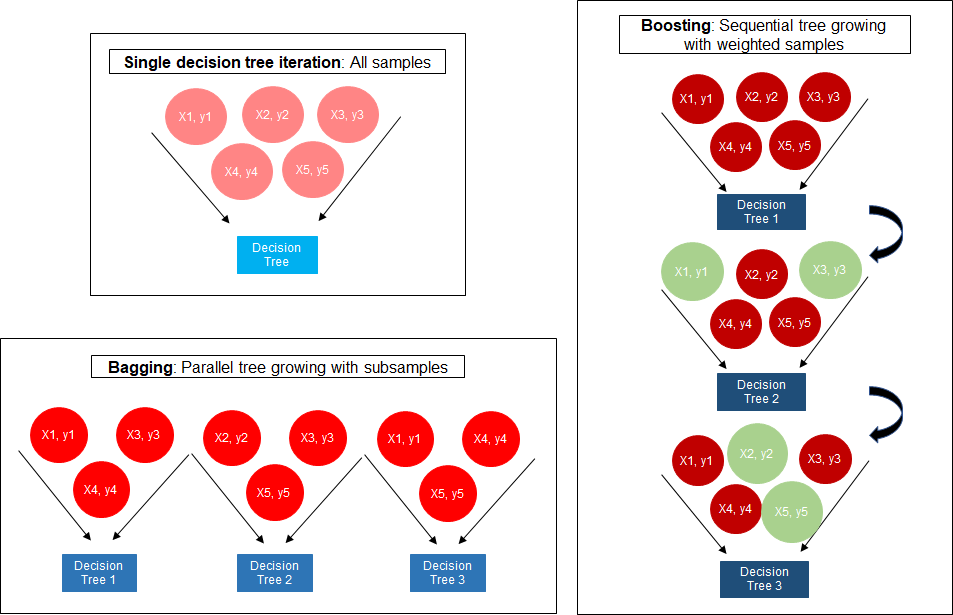

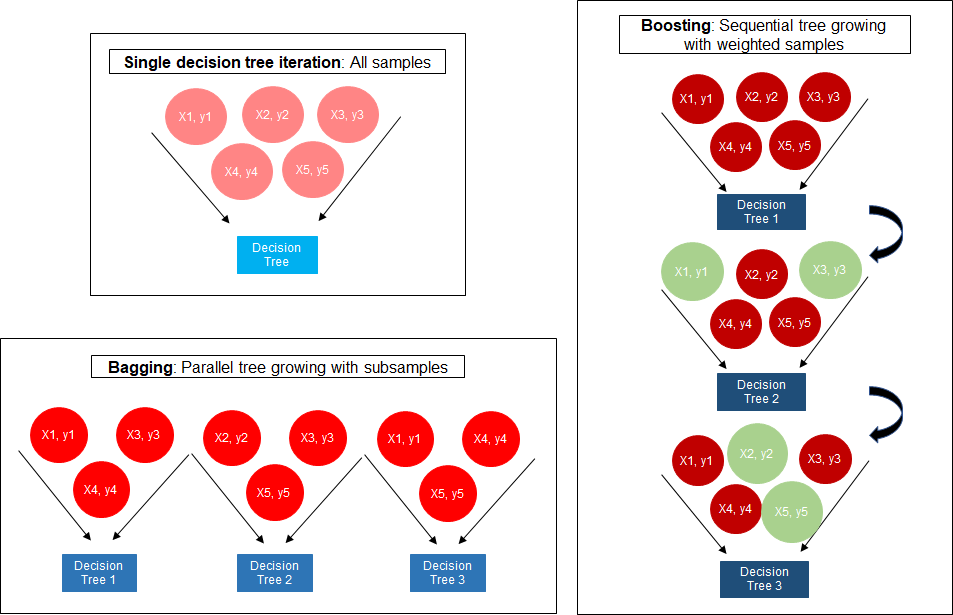

You should check out the concepts of bagging and boosting. Originally published by Rohith Gandhi on May 5th, 2018. Gradient Boosting Decision Tree (GBDT) is a popular machine learning algorithm.

XGBoost is an implementation of gradient boosted decision trees. Gradient boosting was developed as a generalization of AdaBoost by observing that what AdaBoost was doing was a gradient search in decision tree space against a specific loss function (the exponential loss). At each boosting iteration, the regression tree minimizes the least-squares approximation to the negative gradient of the loss (the pseudo-residuals).
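The loop described above can be sketched for the squared loss, where the negative gradient is just the residual y - F. This is a minimal, assumption-laden illustration: one feature, depth-1 regression trees (stumps) fit by least squares, and hypothetical names `fit_stump` and `gradient_boost`; real libraries grow deeper trees over many features.

```python
def fit_stump(xs, targets):
    """Least-squares regression stump on a single feature."""
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    best = None
    for k in range(1, len(order)):
        lo, hi = xs[order[k - 1]], xs[order[k]]
        if lo == hi:
            continue  # no threshold fits between equal feature values
        left = [targets[i] for i in order[:k]]
        right = [targets[i] for i in order[k:]]
        ml, mr = sum(left) / len(left), sum(right) / len(right)
        sse = sum((t - ml) ** 2 for t in left) + sum((t - mr) ** 2 for t in right)
        if best is None or sse < best[0]:
            best = (sse, (lo + hi) / 2, ml, mr)
    if best is None:  # all feature values identical: fall back to a constant
        mean = sum(targets) / len(targets)
        return lambda x: mean
    _, thr, ml, mr = best
    return lambda x: ml if x <= thr else mr


def gradient_boost(xs, ys, n_rounds=50, lr=0.1):
    """Gradient boosting for the squared loss L = (y - F)^2 / 2."""
    f0 = sum(ys) / len(ys)  # the best constant model under squared loss
    preds = [f0] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        # Negative gradient of the loss w.r.t. F: the pseudo-residuals y - F.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)  # least-squares fit to the negative gradient
        stumps.append(stump)
        preds = [p + lr * stump(x) for p, x in zip(preds, xs)]
    return lambda x: f0 + lr * sum(s(x) for s in stumps)


xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
model = gradient_boost(xs, ys)
print([round(model(x), 2) for x in xs])  # close to [1, 1, 1, 5, 5, 5]
```

Each round only corrects a fraction (`lr`) of the remaining residual, which is why boosting needs many sequential trees and why the trees cannot be trained independently of each other.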

XGBoost specifically trains gradient boosted decision trees. In Python, a classifier is created with `from xgboost import XGBClassifier` followed by `clf = XGBClassifier(n_estimators=100)`. Decision-tree-based algorithms are considered best for small-to-medium structured or tabular data.

XGBoost is generally preferred over plain gradient boosting because it often delivers better results and is faster. Difference between gradient boosting and AdaBoost: AdaBoost and gradient boosting are ensemble techniques applied in machine learning to enhance the efficacy of weak learners. Before exploring the concept of gradient boosting, let's look into boosting.
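For contrast with gradient boosting, here is a minimal sketch of discrete AdaBoost, assuming labels in {-1, +1}, a single numeric feature and decision stumps as the weak learners. Instead of fitting residuals, AdaBoost reweights the instances after every round and gives each stump a vote weight `alpha` based on its weighted error. The helper names are hypothetical.

```python
import math


def stump_predict(x, thr, sign):
    """Decision stump: +sign above the threshold, -sign at or below it."""
    return sign if x > thr else -sign


def best_stump(xs, ys, w):
    """Stump with the smallest weighted error under instance weights w."""
    vals = sorted(set(xs))
    # Candidate thresholds: below every value, then midpoints between neighbors.
    thresholds = [vals[0] - 1.0] + [(a + b) / 2 for a, b in zip(vals, vals[1:])]
    best = None
    for thr in thresholds:
        for sign in (1, -1):
            err = sum(wi for x, y, wi in zip(xs, ys, w) if stump_predict(x, thr, sign) != y)
            if best is None or err < best[0]:
                best = (err, thr, sign)
    return best


def adaboost(xs, ys, n_rounds=10):
    """Discrete AdaBoost for labels in {-1, +1} with one-feature stumps."""
    n = len(xs)
    w = [1.0 / n] * n
    ensemble = []
    for _ in range(n_rounds):
        err, thr, sign = best_stump(xs, ys, w)
        err = min(max(err, 1e-10), 1 - 1e-10)  # avoid log(0) on a perfect stump
        alpha = 0.5 * math.log((1 - err) / err)  # the stump's vote weight
        ensemble.append((alpha, thr, sign))
        # Misclassified instances (y * h = -1) are up-weighted, the rest down-weighted.
        w = [wi * math.exp(-alpha * y * stump_predict(x, thr, sign))
             for x, y, wi in zip(xs, ys, w)]
        total = sum(w)
        w = [wi / total for wi in w]

    def predict(x):
        score = sum(a * stump_predict(x, t, s) for a, t, s in ensemble)
        return 1 if score >= 0 else -1

    return predict


xs = list(range(1, 10))
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1]
print(best_stump(xs, ys, [1 / 9] * 9)[0])  # about 0.333: any single stump misses 3 of 9
model = adaboost(xs, ys, n_rounds=3)
print([model(x) for x in xs] == ys)        # True
```

No single stump can separate the middle block from both ends, but after three reweighted rounds the weighted vote of three stumps classifies every training point correctly.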

A prior understanding of gradient boosted trees is useful. One important difference between random forests and gradient boosting machines is that the predictors in a random forest are trained independently of each other, whereas those in a gradient boosting machine are built sequentially, each one trying to improve on the mistakes made by its predecessor. XGBoost can also be used to train a random forest, by growing many trees in parallel within a single round (with row and column subsampling) instead of boosting them one after another.

LightGBM uses a novel technique, Gradient-based One-Side Sampling (GOSS), to filter the data instances used for finding a split value, while XGBoost uses a pre-sorted algorithm and a histogram-based algorithm for computing the best split. AdaBoost is the original boosting algorithm, developed by Freund and Schapire. First, let us understand how pre-sorted splitting works.
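Pre-sorted (exact greedy) split finding can be sketched as follows: sort a feature's values once, then sweep them from left to right while keeping running sums of gradients and Hessians, scoring every possible cut with the regularized gain. This is a simplified single-feature, single-node illustration under assumed names (`best_split_presorted`, `lam`, `gamma`); a real implementation reuses the sorted order across nodes and features.

```python
def best_split_presorted(xs, grads, hess, lam=1.0, gamma=0.0):
    """Exact greedy split search on one feature using gradient statistics."""
    order = sorted(range(len(xs)), key=lambda i: xs[i])  # pre-sort once
    g_total, h_total = sum(grads), sum(hess)
    gl = hl = 0.0
    best_gain, best_thr = 0.0, None
    for pos in range(len(order) - 1):
        i, j = order[pos], order[pos + 1]
        gl += grads[i]  # running sums of everything left of the cut
        hl += hess[i]
        if xs[i] == xs[j]:
            continue  # a threshold cannot separate equal values
        gr, hr = g_total - gl, h_total - hl
        gain = 0.5 * (gl ** 2 / (hl + lam) + gr ** 2 / (hr + lam)
                      - g_total ** 2 / (h_total + lam)) - gamma
        if gain > best_gain:
            best_gain, best_thr = gain, (xs[i] + xs[j]) / 2
    return best_thr, best_gain


# Squared loss with every prediction at 0: g = prediction - y = -y, h = 1.
xs = [3, 1, 4, 2]
ys = [10.0, 0.0, 10.0, 0.0]
grads = [-y for y in ys]
hess = [1.0] * len(ys)
print(best_split_presorted(xs, grads, hess))  # threshold 2.5, gain 80/3
```

Every distinct value is a candidate, so the search is exact, but its cost grows with the number of distinct values per feature, which is what histogram-based methods try to reduce.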

The algorithm is similar to Adaptive Boosting (AdaBoost) but differs from it in certain aspects. XGBoost computes second-order gradients, i.e. second partial derivatives of the loss function. AdaBoost, gradient boosting and XGBoost are three algorithms that do not get much recognition.

The concept of a boosting algorithm is to train predictors successively, where every subsequent model tries to fix the flaws of its predecessor. They sought to fix a key problem, as they see it, in all the other GBMs in the… The different types of boosting algorithms are:

XGBoost delivers higher performance than plain gradient boosting. What's so special about them? XGBoost's second-order approach is also known as Newton boosting.

The training methods used by the two algorithms are different. XGBoost models dominate many Kaggle competitions. I think the difference between gradient boosting and XGBoost is that XGBoost focuses on computational power by parallelizing the construction of each tree, which one can see in this blog.

XGBoost is a more regularized form of gradient boosting. Neural networks and genetic algorithms are our naive attempts to imitate nature. We'll also present a concise comparison of all the new algorithms, allowing you to quickly understand the main differences between them.

It is a boosting algorithm used in competitions such as Kaggle to improve model accuracy and robustness. Approaching big data (gradient boosting versus XGBoost): in the real world, datasets can be enormous, with trillions of data points.
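One way to cope with very large datasets is histogram-based split finding: bucket each feature into a small number of bins, accumulate gradient and Hessian sums per bin in a single pass, then scan only the bin boundaries. The sketch below assumes a simple rank-based choice of bin edges (a stand-in, not XGBoost's weighted quantile sketch) and hypothetical function names. Because per-bin sums can be added together, histograms built on separate data shards can be merged before the scan.

```python
import bisect


def quantile_edges(xs, n_bins):
    """Rank-based bin edges: a simple stand-in for a quantile sketch."""
    s = sorted(xs)
    return sorted({s[(k * len(s)) // n_bins] for k in range(1, n_bins)})


def build_histogram(xs, grads, hess, edges):
    """One pass: sum gradients/Hessians per bin (bin b holds edges[b-1] < x <= edges[b])."""
    g_hist = [0.0] * (len(edges) + 1)
    h_hist = [0.0] * (len(edges) + 1)
    for x, g, h in zip(xs, grads, hess):
        b = bisect.bisect_left(edges, x)
        g_hist[b] += g
        h_hist[b] += h
    return g_hist, h_hist


def best_split_histogram(g_hist, h_hist, edges, lam=1.0, gamma=0.0):
    """Scan bin boundaries instead of every distinct feature value."""
    g_total, h_total = sum(g_hist), sum(h_hist)
    gl = hl = 0.0
    best_gain, best_thr = 0.0, None
    for b, edge in enumerate(edges):
        gl += g_hist[b]
        hl += h_hist[b]
        gr, hr = g_total - gl, h_total - hl
        gain = 0.5 * (gl ** 2 / (hl + lam) + gr ** 2 / (hr + lam)
                      - g_total ** 2 / (h_total + lam)) - gamma
        if gain > best_gain:
            best_gain, best_thr = gain, edge  # split rule: x <= edge goes left
    return best_thr, best_gain


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [0.0, 0.0, 0.0, 0.0, 0.0, 10.0, 10.0, 10.0]
grads = [-y for y in ys]  # squared loss, all predictions at 0
hess = [1.0] * len(ys)
edges = quantile_edges(xs, 4)  # [3, 5, 7]
g_hist, h_hist = build_histogram(xs, grads, hess, edges)
print(best_split_histogram(g_hist, h_hist, edges))  # (5, 62.5)
```

Here only three boundaries are scored instead of seven, and the split found (x <= 5) happens to match the exact one; with coarser bins the approximation can miss the exact optimum, which is the accuracy-for-speed trade-off of this approach.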

